It’s been a pretty interesting few weeks for anyone following AI image generation. Things are moving fast, and it might even be a bit hard to keep up with everything that’s going on. First, there was the arrival of Stable Diffusion. That was version 1.4 of the model, BTW.

This week, Stability AI, the company behind Stable Diffusion, released version 1.5 of the model on its Discord server. To be quite clear, version 1.5 is not publicly available to download yet, but you can try it out via the bot on the Discord server.

And if you’re getting ready to run over there and try it out, hang on! There’s more 🙂 Today I woke up to an e-mail from OpenAI indicating that their AI image generation system, Dall-e, can now do Outpainting!

What’s Outpainting, you ask?

It’s basically the ability to generate an image via Dall-e and then extend it beyond its original borders by literally expanding the canvas: you add to the image by painting outward from the original! Still confused? OK, let me show you an example step-by-step …

First, I generated an image with my favourite Discworld prompt. (I’m obsessed with trying to create a perfect Discworld image and I’ve been trying out a lot of prompts without much success, believe me 🤪)

This is the prompt: “Terry Pratchett’s Discworld with a single tall mountain in the middle sitting in dark space full of stars, Water is pouring down from the edges of the Discworld, Lights of one city with short buildings visible on the Discworld, Artstation, Massive scale, Highly detailed, Cinematic, Colorful. Digital art”.

Here are the three best results (or what I consider the best) out of the four I got from that prompt:

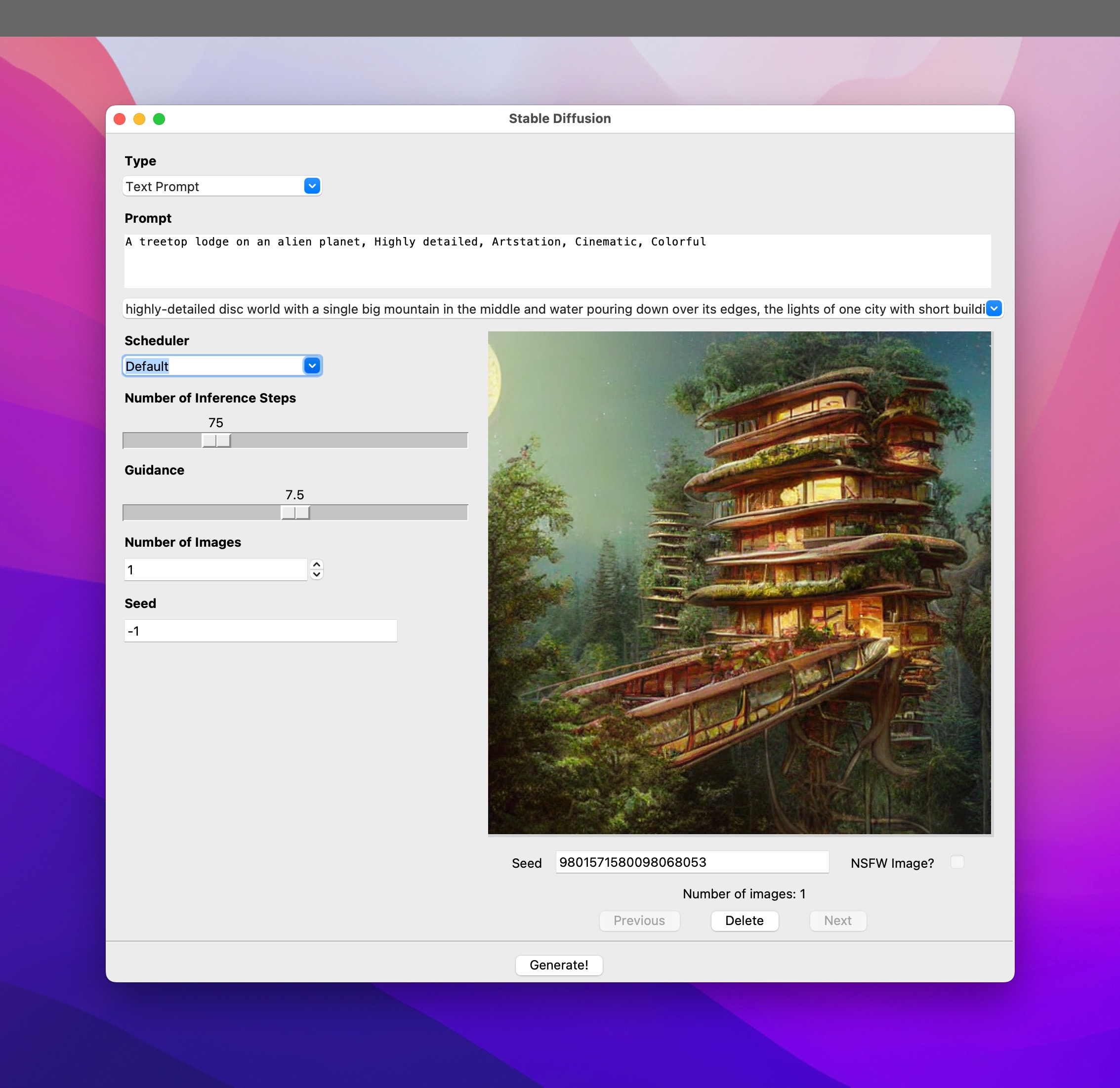

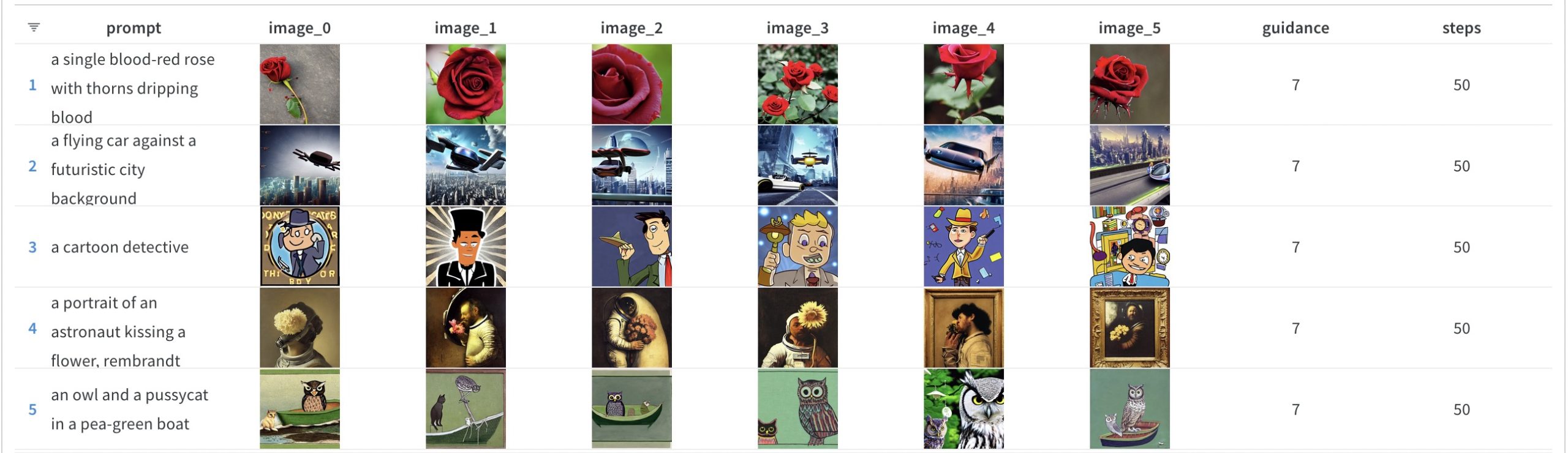

On a slight tangent (since this post is about Dall-e, after all), here are my first three results for the same prompt from Stable Diffusion (I’m including them only because I’ve been talking about Stable Diffusion a lot lately):

Do note that none of the above images have been altered or enhanced in any way. They are exactly as the AI generated them for the same prompt.

But back to Dall-e. The above is what you get from any AI image generation system now — a single image which can be enhanced, modified, or fed back into the AI as a prompt (at least in some cases) to generate a new image. But that’s where it ends … till now. (Do I sound like an advert, or what? 🤪)

Now, with Dall-e Outpainting, you can continue the drawing. Let me show you how.

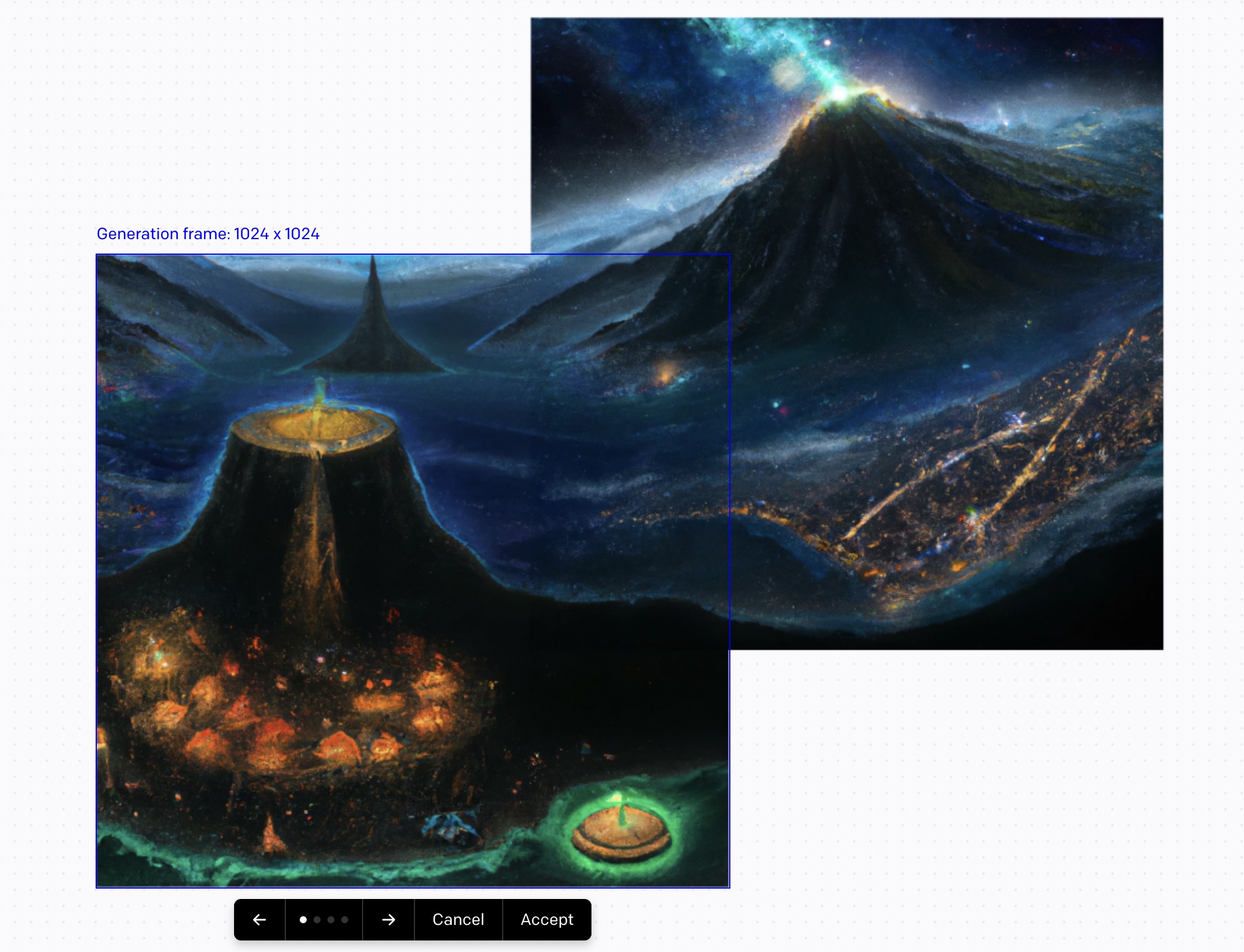

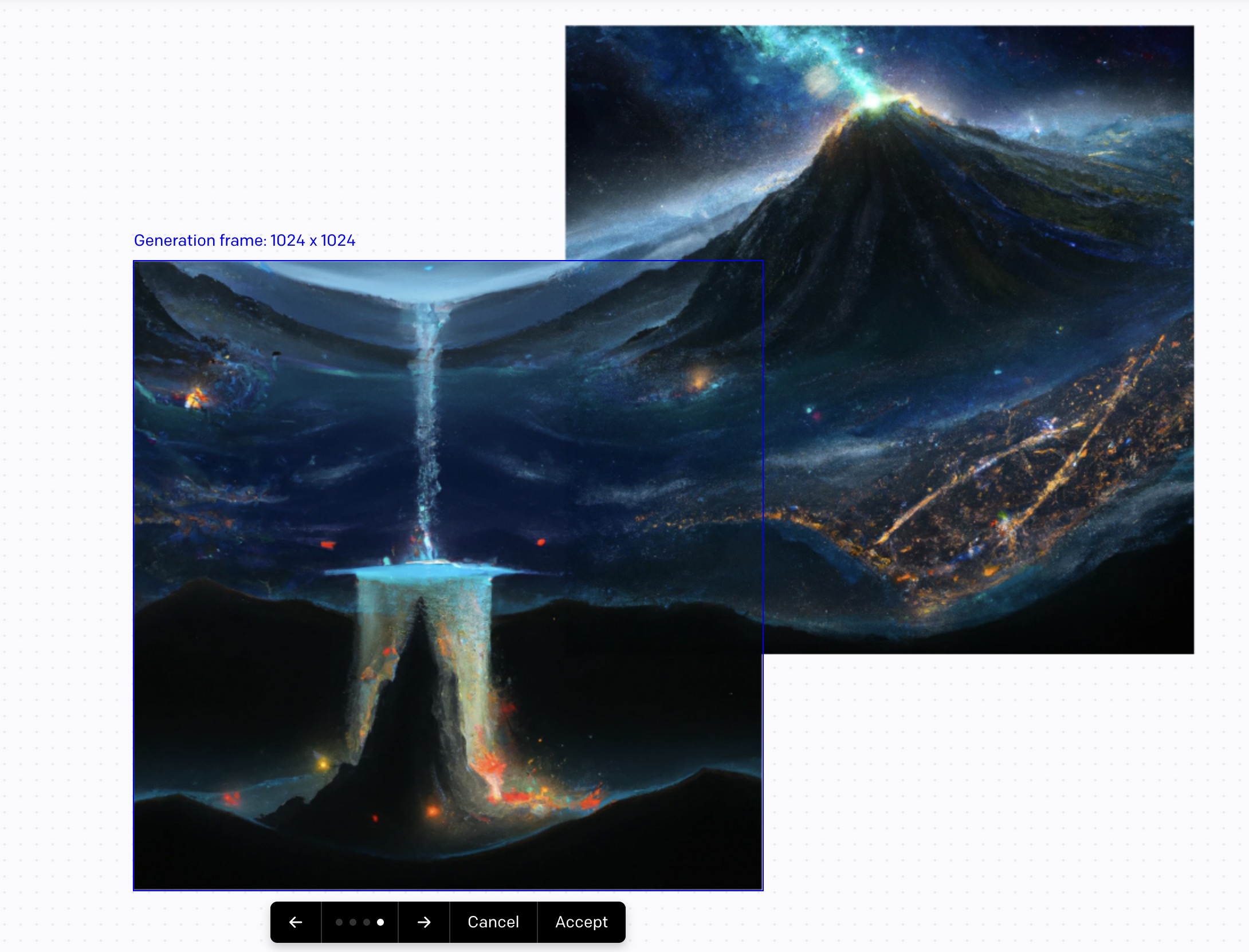

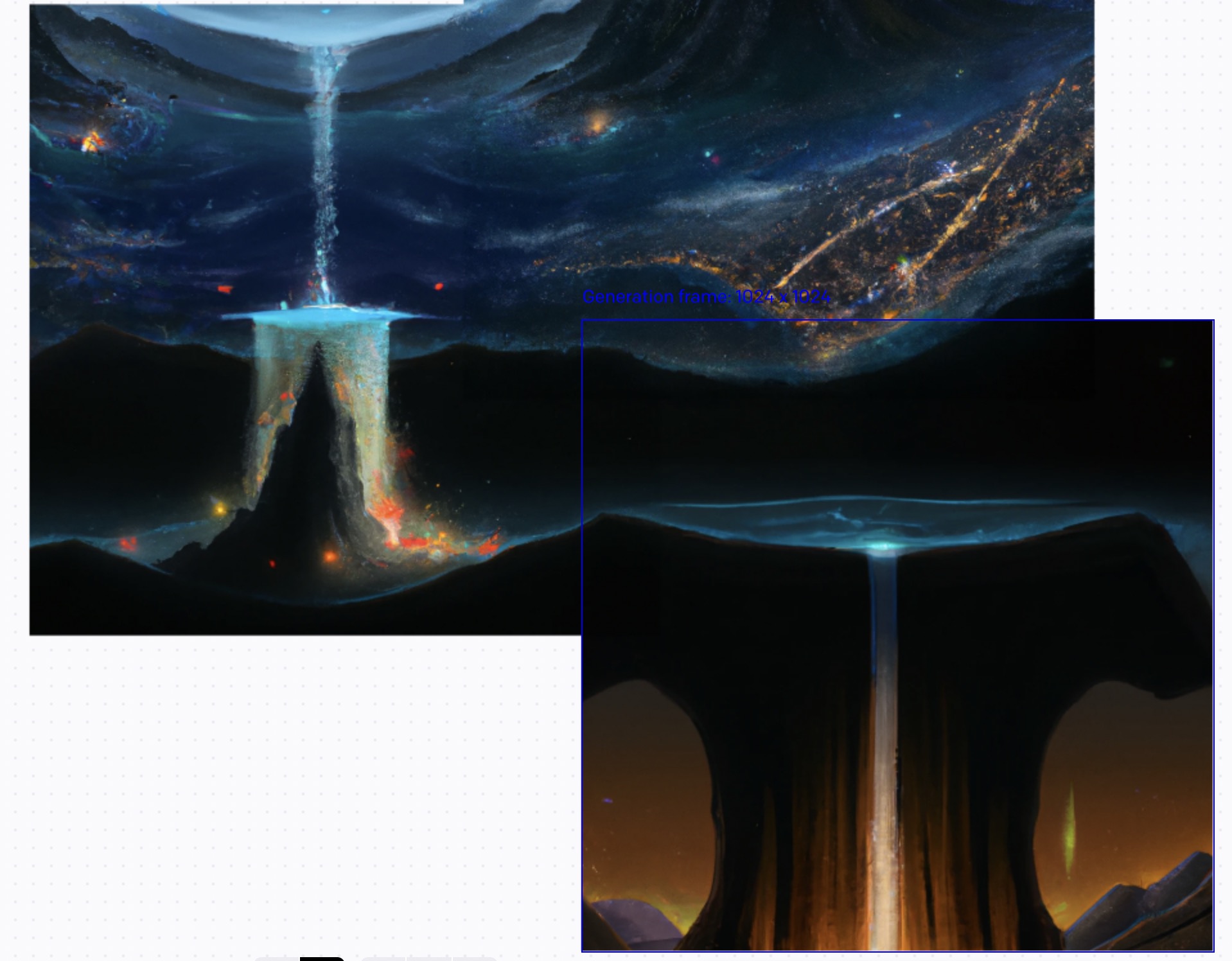

I generated a single image as usual with my prompt and then, in the Outpainting editor, placed a second square on the canvas to generate another portion of the image. Here’s what I got:

As you’ll notice, the second square seamlessly continues on from the original image! At the bottom of the above images, there’s a UI control that lets you navigate between the four results (again, I chose only the three I liked for the screenshots). It also lets you either cancel, which discards the generated results so you can generate new ones, or accept one of the choices.

If you accept a choice, that image gets placed on your canvas and now you are able to extend the image even further with another square 🙂

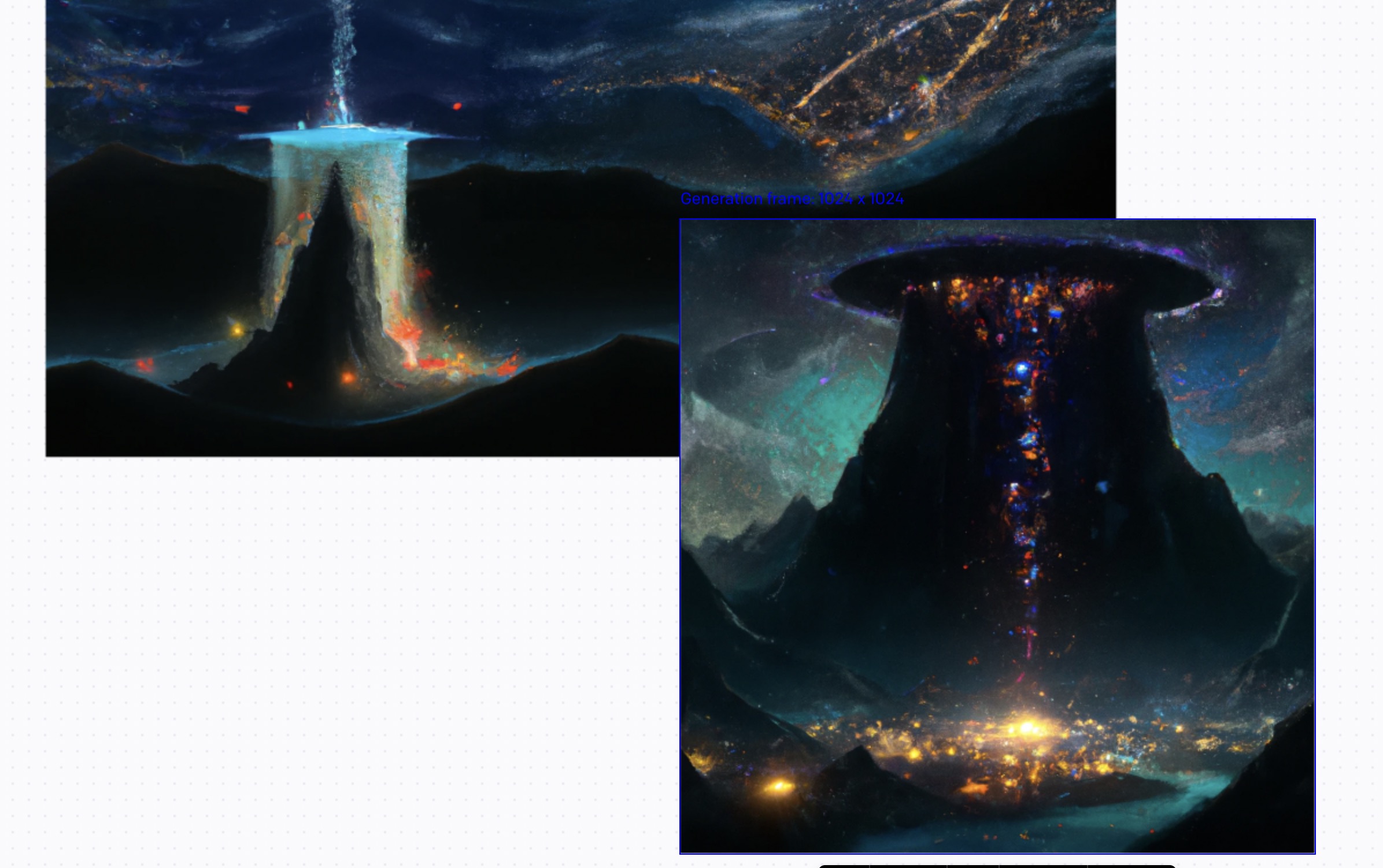

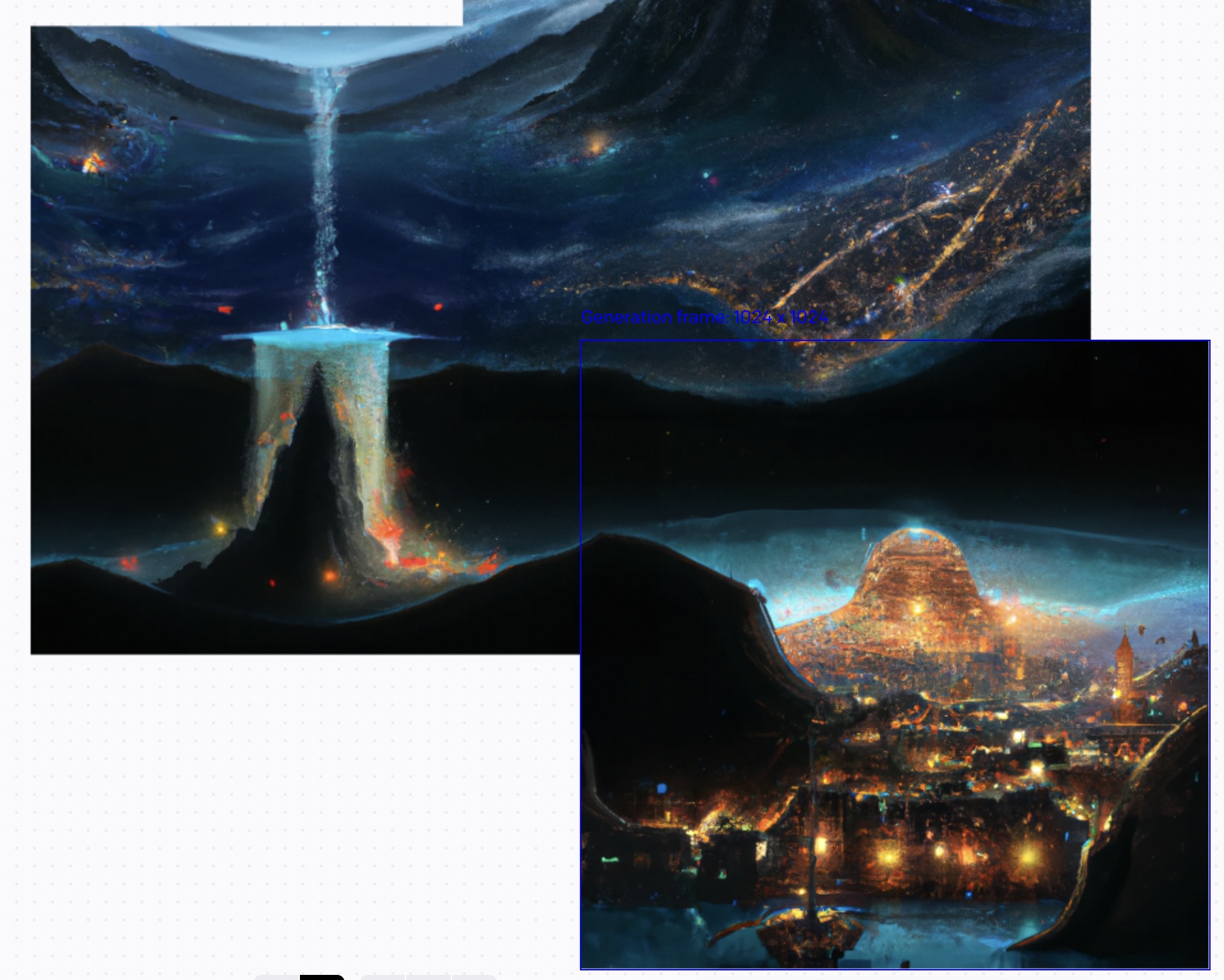

I wanted to see what would happen if the new square did not overlap the existing image at all. So I placed it in the bottom right corner. This is what I got:

Obviously, none of the new images were a seamless continuation of the existing image, since there was no overlap with the existing image from which the AI could infer how the extension should look. I’m sure you understand this anyway, but I had to try it to see what would happen …

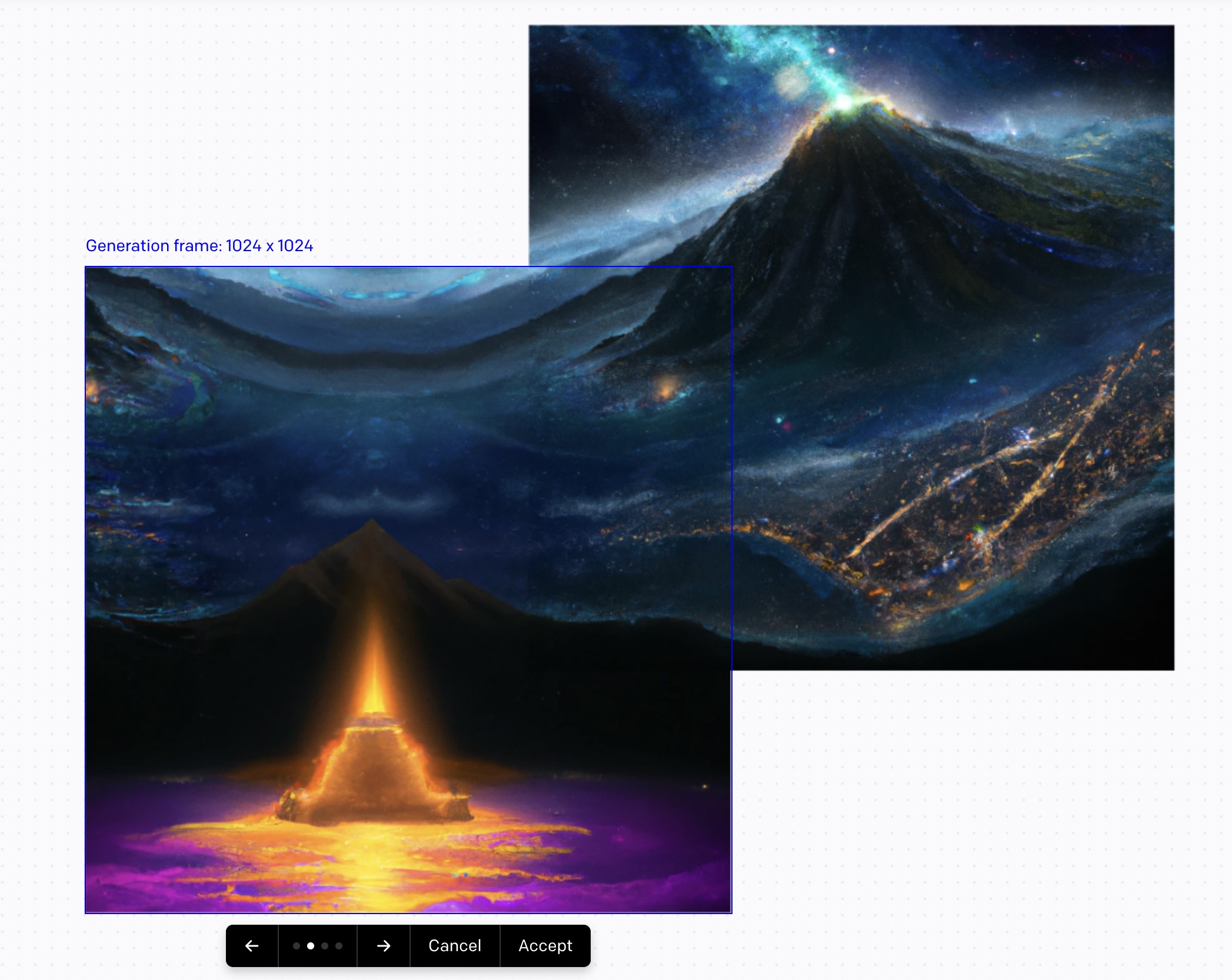

So I cancelled that and placed a new square like this:

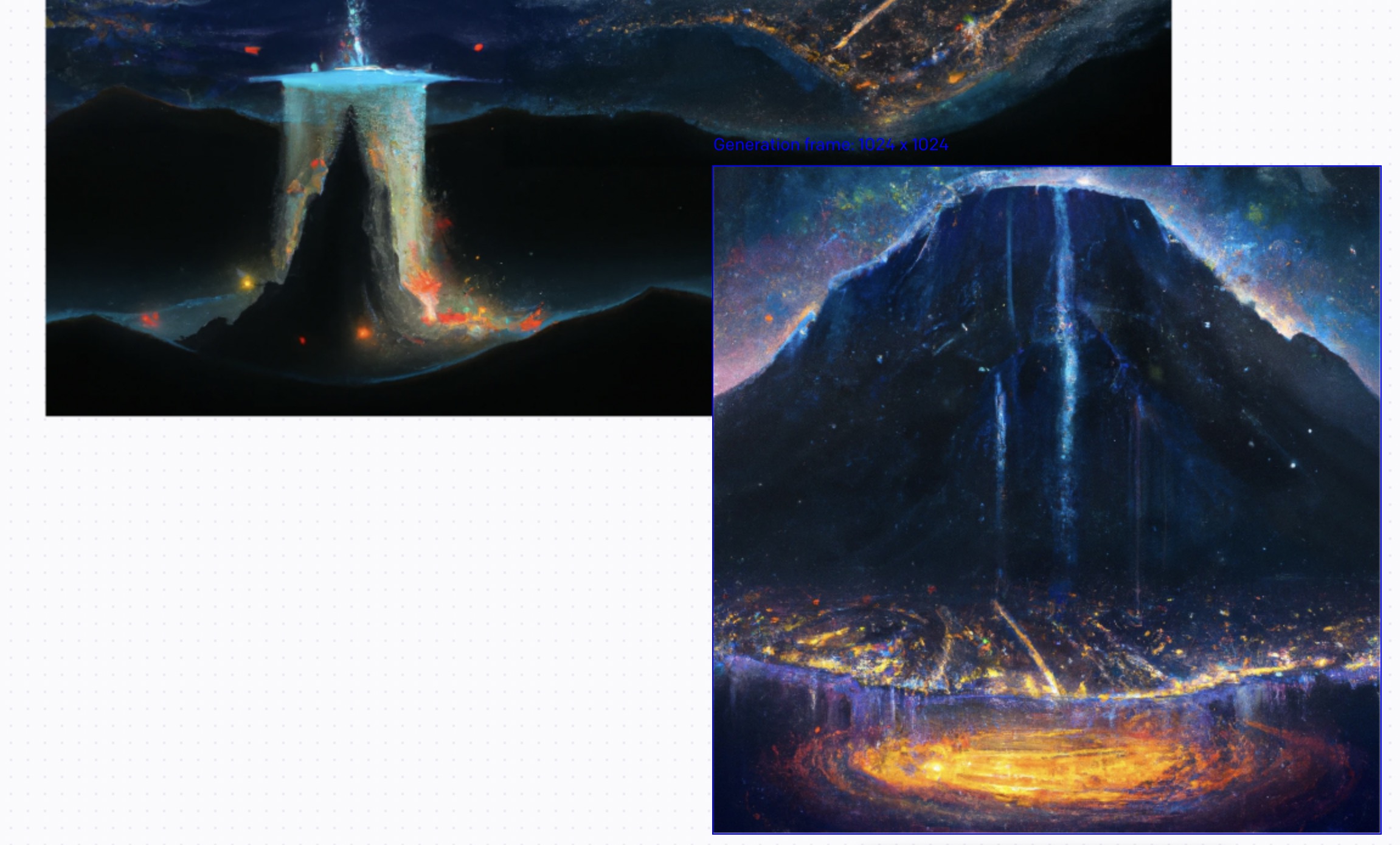

This time, I got the following results:

So this time, since the new square overlapped the existing image, the continuation was seamless … as expected.

Of course, since this is a huge landscape and the seams mostly ran through large features, this might not seem like a big achievement. I must confess that I’m curious about what would happen if you started with a close-up of something like a flower and tried to Outpaint from that. Would things be just as seamless?

Unfortunately, my Dall-e credits are limited and I wasn’t in the mood to try out more experiments … at least not today. Maybe the folks at OpenAI would give me some free credits so that I can carry on experimenting and writing about it? (Hint, hint 😛)

Do note that I used the same prompt for all three squares even though the prompt can be changed for each new square. I’d like to do more experimenting with that too to see what can be done.

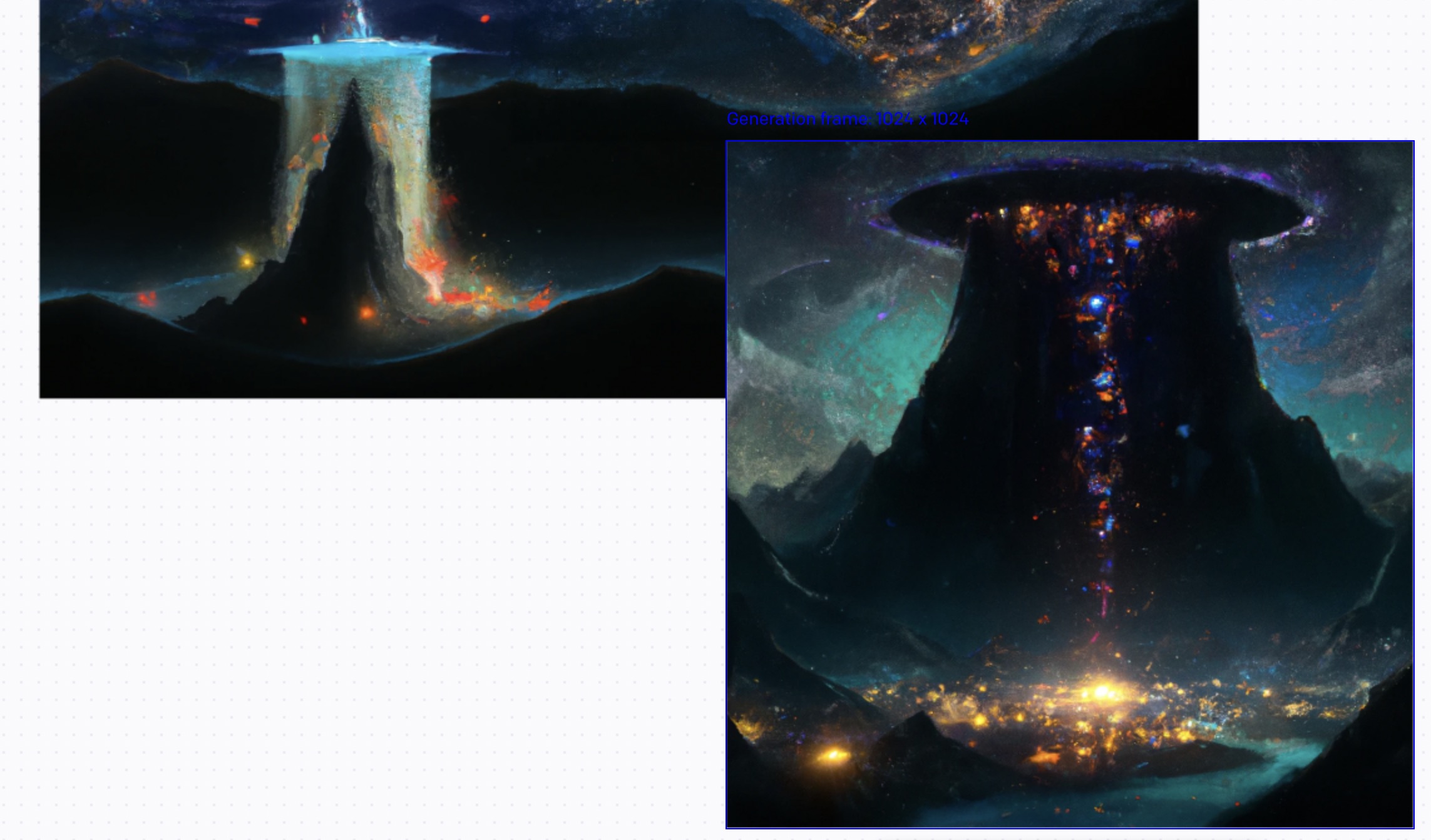

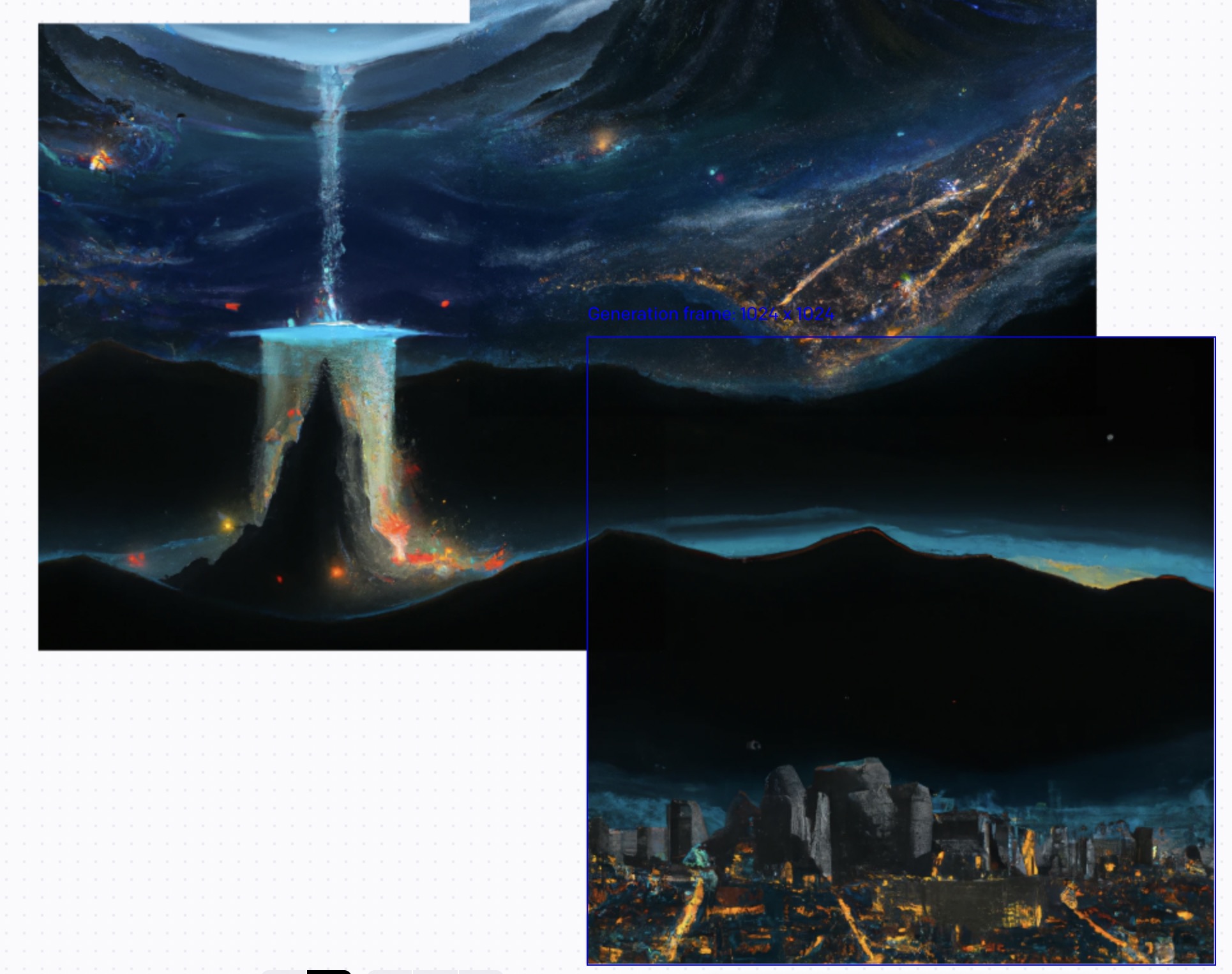

But for the moment, here’s the final Discworld image that I settled on from my Outpainting session:

If you create any awesome Discworld images (or any kind of brilliant image for that matter) do let me know in the comments below since I’d love to see them 🙂

And if you’re interested in seeing some fantastic implementations of Outpainting, and have not already seen the announcement blog post from OpenAI, you might want to go here and see how they expanded upon “Girl with a Pearl Earring”.