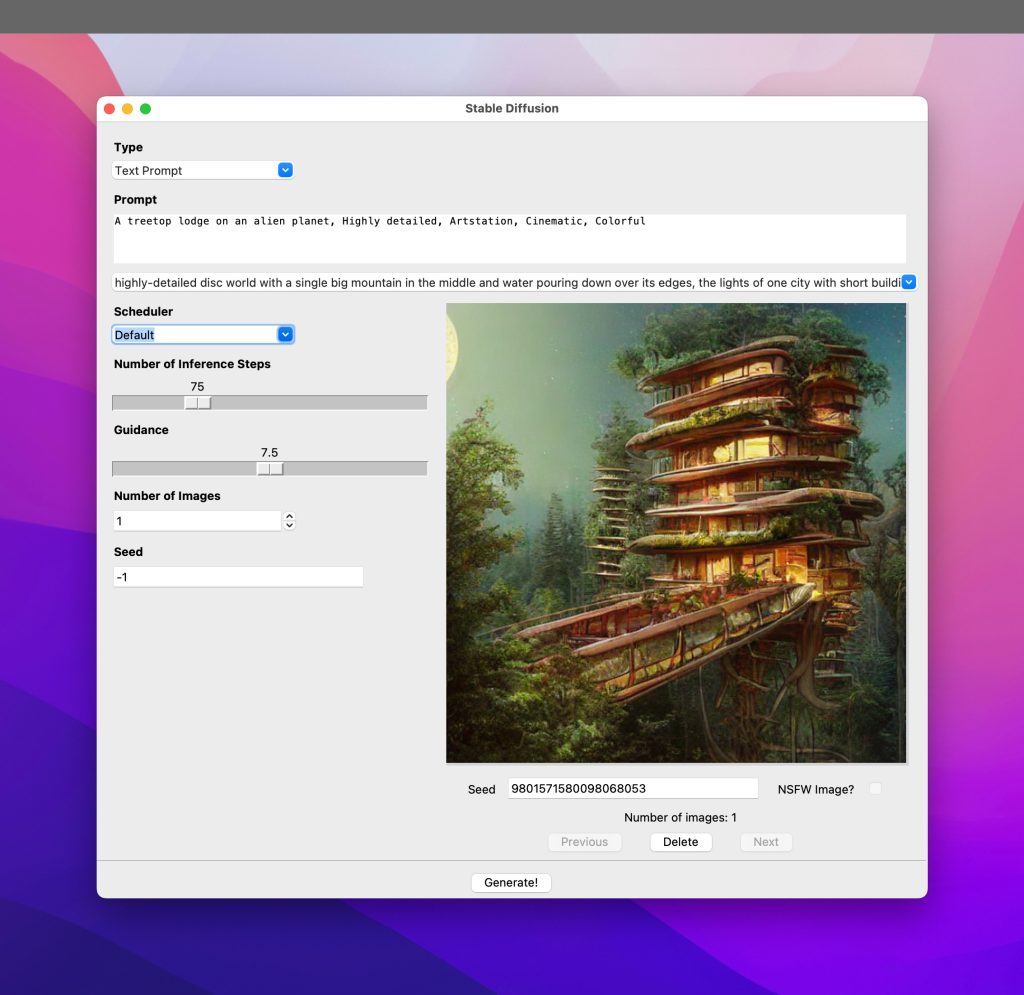

As I’ve written previously, I’ve been working on a GUI for Stable Diffusion since I prefer a simple UI that lets me do everything in one place. The trouble is that it leaves me less time for other Stable Diffusion development, since I only get to do any of this work in my spare time and on weekends.

One thing that I had been meaning to look into was that my Stable Diffusion image generator wasn’t working correctly with the seed function. Basically, each image generated by Stable Diffusion uses a random seed, which is just a random integer. If you generate a new image using the same prompt and seed, you’ll get the exact same image from Stable Diffusion again.

At least, that was the theory 🙂

My original code for working with Stable Diffusion did not generate or report a random seed, nor did it let you enter your own seed. But I added both with the second iteration of the GUI.

Note: If you like the GUI below and would like to use it, you can get it here:

https://github.com/FahimF/sd-gui

Instructions for installing are there as well, but if you are not familiar with the command line or Python, it can be a bit of work 🙂 I did consider writing a script which would simplify the work for people, but there are so many variables in play that I’m not sure it would be an easy task.

As you’ll note in the above screenshot, there are two seed fields. The one on the left is for you to enter the seed before you generate a new image. The seed value under the image is the random seed that was used for the image above it. If you copy that number, plug it into the “Seed” box on the left, and generate a new image, you should get the same image again.
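The seed round trip described above can be sketched in a few lines of plain Python. This is only an illustration and not the code from my GUI: `resolve_seed` and `initial_noise` are hypothetical names, and the standard library's `random.Random` stands in for the generator that would produce the starting noise in a real pipeline.

```python
import random


def resolve_seed(seed_text):
    """Return the seed typed into the Seed box, or a fresh random one if the box is empty."""
    seed_text = seed_text.strip()
    if seed_text:
        return int(seed_text)
    return random.randint(0, 2**32 - 1)


def initial_noise(seed, count=4):
    """Draw `count` Gaussian samples from a generator seeded with `seed` (a stand-in for the starting noise)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


seed = resolve_seed("")                          # empty box: a random seed is picked
first = initial_noise(seed)
again = initial_noise(resolve_seed(str(seed)))   # paste the displayed seed back in
print(seed, first == again)
```

The point is simply that the seed shown under the image is enough to rebuild the same starting noise, which is why pasting it back into the Seed box should reproduce the image.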

Unfortunately, this did not work correctly on Apple Silicon devices. Turns out that this was due to this bug in PyTorch. That issue thread also has a workaround for the issue. So I used the workaround to get the seed functionality working on Apple Silicon and I was finally able to reliably generate an image from the same prompt using the same seed!

Once I got this functionality in place, I noticed something: while the image for the same prompt with the same seed was mostly the same, if you changed the scheduler (see screenshot above, notice the Scheduler dropdown?) the resulting image was not *exactly* the same …
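Here is a toy analogy for why that might happen. It is not how the actual schedulers work: it just takes one seeded starting value and pushes it through the same simple equation with two different step rules. The function names are made up for illustration.

```python
import random


def euler_steps(x, steps):
    """First-order update for dx/dt = -x over t in [0, 1]."""
    h = 1.0 / steps
    for _ in range(steps):
        x = x + h * (-x)
    return x


def heun_steps(x, steps):
    """Second-order (predictor-corrector) update for the same equation."""
    h = 1.0 / steps
    for _ in range(steps):
        predicted = x + h * (-x)
        x = x + 0.5 * h * ((-x) + (-predicted))
    return x


start = random.Random(1234).gauss(0.0, 1.0)  # same seed, same starting point
a = euler_steps(start, 20)
b = heun_steps(start, 20)
print(a, b)  # close to each other, but not identical
```

Both rules start from the same seeded value and head toward the same answer, yet they land in slightly different places because each takes its steps differently. My assumption is that something similar is going on with the schedulers: the seed fixes the starting noise, and the scheduler decides the path from there.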

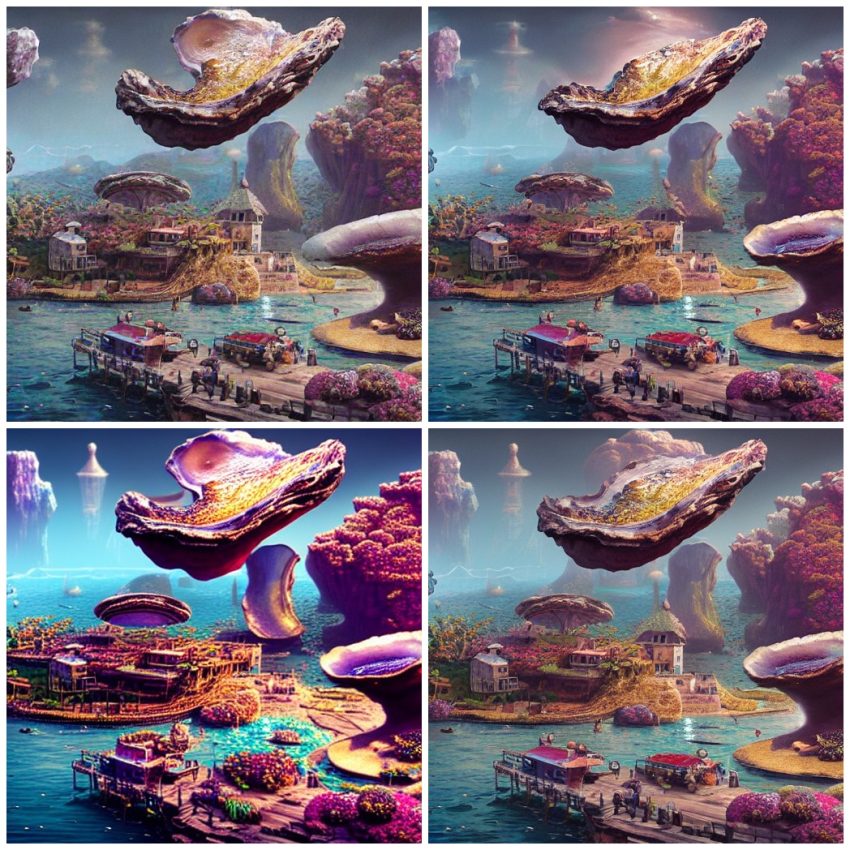

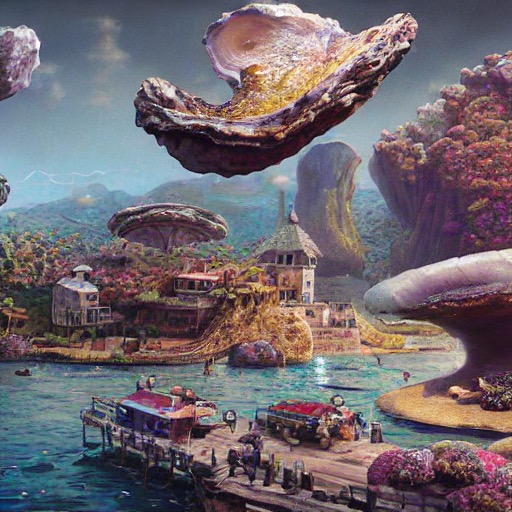

This led to some interesting effects. My app allows you to use the default scheduler in Stable Diffusion, or to use one of three custom schedulers: LMS, PNDM, and DDIM. (Check the Hugging Face diffusers documentation for further details on each of these schedulers.) Here’s what I got when I generated the same image using each of those scheduler options:

Do you notice the differences? I find the variations in image elements and the changes in image quality really interesting! I want to do more images with the same seed and look at the variations in more detail, but I guess that’ll have to wait since I’m busy working on another variant of the GUI 😄

But I’ll talk more about the new GUI in another post … if I can find the time to write a post in between working on the GUI 😛